I’m writing a series over at Real Time Weekly about using WebRTC on iOS, specifically to document the research we’ve been conducting in order to release a couple of WebRTC-based iPhone apps under AgilityFeat’s brand. One of these apps is a remote spectrum analyzer based on real-time audio streams.

In this tutorial we’ll go over the steps to build a spectrum analyzer in plain HTML, using WebGL (through ThreeJS) and GLSL shaders for the graphics and the getUserMedia function to access the microphone.

ThreeJS and GLSL Shaders

I’ve written about shaders before in my guide to using WebGL on iOS without PhoneGap or Ionic, including why they’re so useful on mobile platforms. The short version is that vertex and fragment shaders run on the GPU (graphics chip), so the per-vertex and per-pixel work needed to draw your graphics happens there rather than on the CPU (processor).

ThreeJS provides a very simple API for passing GLSL shaders to it. When creating 3D objects you can assign them different types of materials, and one of the materials ThreeJS offers is THREE.ShaderMaterial.

Shaders are written in GLSL, a C-like language. Instead of having you write JavaScript that gets translated into GLSL, ThreeJS made the wise decision to accept the shader source as a good ol’ string, which it hands to WebGL to compile.

In order to interact with the shaders, in this case for animation purposes, we use a couple of special variable collections that ThreeJS exposes to us as properties of the ShaderMaterial.

The two properties we’ll be looking at are the attributes and the uniforms.

A couple of important notes:

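Shader attributes hold one value per vertex and are uploaded to the GPU only once. That means that even though you can change their values after the first frame has been rendered, the shader will keep using the initial values unless you flag the attribute for re-upload (in the ThreeJS versions used here, by setting its needsUpdate property to true).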

Shader uniforms, on the other hand, hold a single value (or array) shared by every vertex, and they are re-read on each frame render, so changes you make between frames take effect right away.

Both attributes and uniforms are strongly typed. Besides standard data types such as floats and integers, you’ll also see some GLSL-specific types such as vec3, a three-component vector meant for multi-dimensional arithmetic.

For the full set of supported data types, take a look at the official list on GitHub:

https://github.com/mrdoob/three.js/wiki/Uniforms-types
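To make the typing rules a bit more concrete, here is a small sketch in plain JavaScript (no ThreeJS involved). glslDeclarations is a hypothetical helper, not part of ThreeJS: it turns descriptors shaped like the attributes and uniforms objects used later in this post into the GLSL declarations a vertex shader needs. It only knows the three type codes this post uses ('f', 'v3' and 'fv1'), and it assumes you pass in the length of each 'fv1' array, since GLSL arrays must have a fixed size.

```javascript
// Hypothetical helper (not part of ThreeJS): maps the ThreeJS type codes used in
// this post to GLSL types. Only 'f', 'v3' and 'fv1' are handled.
const GLSL_TYPES = {
  f: "float",   // a single float
  v3: "vec3",   // a THREE.Vector3
  fv1: "float", // a flat array of floats, declared as float name[N]
};

// Own-property check, so names like "toString" are not mistaken for types.
const isKnown = (type) => Object.prototype.hasOwnProperty.call(GLSL_TYPES, type);

// Builds GLSL declaration lines from attribute/uniform descriptors.
// arraySizes supplies N for every 'fv1' uniform.
function glslDeclarations(attributes, uniforms, arraySizes = {}) {
  const lines = [];
  for (const [name, { type }] of Object.entries(attributes)) {
    // WebGL 1 attributes cannot be arrays (or integers), so only
    // single float-based types are accepted here.
    if (type === "fv1" || !isKnown(type)) {
      throw new Error(`Unsupported attribute type '${type}' for ${name}`);
    }
    lines.push(`attribute ${GLSL_TYPES[type]} ${name};`);
  }
  for (const [name, { type }] of Object.entries(uniforms)) {
    if (!isKnown(type)) {
      throw new Error(`Unsupported uniform type '${type}' for ${name}`);
    }
    if (type === "fv1") {
      const size = arraySizes[name];
      if (!Number.isInteger(size) || size <= 0) {
        throw new Error(`Uniform array ${name} needs a positive size`);
      }
      lines.push(`uniform ${GLSL_TYPES[type]} ${name}[ ${size} ];`);
    } else {
      lines.push(`uniform ${GLSL_TYPES[type]} ${name};`);
    }
  }
  return lines;
}

// Usage with the same descriptors the spectrum shader defines later on.
const decls = glslDeclarations(
  { translation: { type: "v3", value: [] }, idx: { type: "f", value: [] } },
  { amplitude: { type: "fv1", value: [] } },
  { amplitude: 50 }
);
console.log(decls.join("\n"));
// attribute vec3 translation;
// attribute float idx;
// uniform float amplitude[ 50 ];
```

Generating the declarations from one description is just one way to keep the JavaScript side and the GLSL source in sync; the shader in this post writes them by hand.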

Preparing a Spectrum GLSL Shader

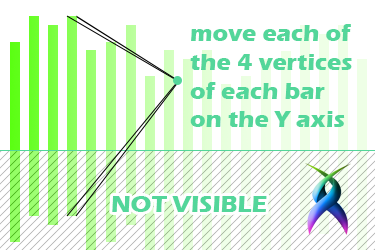

As you can see in the chart above, what I’m going to do, for simplicity’s sake, is move each bar up and down as a whole based on the frequency data, shifting all four of its vertices by the same amount, instead of resizing the bars or moving just the top two vertices of each one. I talk about vertices rather than shapes because that is how shaders work: a vertex shader runs once for each vertex and applies its transformation to that vertex alone.

Let’s start with the most basic of vertex shaders, with no transformations being applied whatsoever:

THREE.SpectrumShader = {
  defines: {},
  attributes: {
    // We'll add our attributes here
  },
  uniforms: {
    // We'll add our uniforms here
  },
  vertexShader: [
    "varying vec3 vNormal;",
    "void main() {",
    "gl_PointSize = 1.0;",
    "vNormal = normal;",
    "vec3 newPosition = position + normal;",
    "gl_Position = projectionMatrix * modelViewMatrix * vec4(newPosition, 1.0);",
    "}"
  ].join("\n"),
  fragmentShader: [
    "void main() {",
    "gl_FragColor = vec4(0.0, 0.0, 0.0, 1.0);", // R, G, B, Opacity
    "}"
  ].join("\n")
};

As a good practice, this should be placed in a separate JavaScript file.

So I’m going to need an attribute for each vertex to hold the maximum number of pixels it will be pushed down, and a uniform to hold a ratio from 0.0 to 1.0 which I’ll update on a frame-to-frame basis. Later I’ll multiply these two values together to get the final amount by which I move down the vertices of each bar.

We modify the following lines of our shader:

attributes: {
  // We'll add our attributes here
},
uniforms: {
  // We'll add our uniforms here
},

And we’re left with:

attributes: {
  "translation": { type:'v3', value:[] }, // The values are of type Vector3 so we can
                                          // translate each vertex's X, Y, and Z positions
  "idx": { type:'f', value:[] }           // I set this as a float value since integer attributes
                                          // are not allowed
},
uniforms: {
  "amplitude": { type: "fv1", value: [] } // This is a regular array of float values
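},

The idx attribute you see there is needed because WebGL 1 is based on OpenGL ES 2.0 and its shading language, GLSL ES 1.00, which doesn’t provide an id (like the gl_VertexID built-in of later GLSL versions) telling the shader which vertex it is processing on each invocation. Storing the bar number in a per-vertex attribute works around that.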
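Here is what that workaround produces, as a plain-JavaScript sketch assuming the four-vertices-per-bar layout this post uses. buildBarIndexAttribute is a hypothetical helper: it builds the same flat list that addBar ends up pushing into material.attributes.idx.value, one float per vertex holding the number of the bar that vertex belongs to.

```javascript
// Hypothetical helper: one float per vertex holding its bar number, assuming
// each bar owns 4 consecutive vertices (as addBar does in this post).
const VERTICES_PER_BAR = 4;

function buildBarIndexAttribute(barCount) {
  const idx = [];
  for (let bar = 0; bar < barCount; bar++) {
    for (let v = 0; v < VERTICES_PER_BAR; v++) {
      // Stored as a float because WebGL 1 attributes cannot be integers;
      // small whole numbers are represented exactly as floats.
      idx.push(bar);
    }
  }
  return idx;
}

// The shader recovers the bar with int(idx) and reads amplitude[bar].
const idx = buildBarIndexAttribute(3);
console.log(idx.join(", ")); // 0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2
```

In other words, each entry is just the vertex number divided by four and rounded down, which is the information a built-in vertex id would have given us.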

In our main JavaScript file we can instantiate our ShaderMaterial the following way:

material = new THREE.ShaderMaterial( THREE.SpectrumShader );

Once our material is instantiated, we can access the attributes and uniforms like this:

material.attributes.translation.value.push(new THREE.Vector3(0.0, 145.0, 0.0));

Drawing bars in a ThreeJS geometry

The awesome thing about using GLSL shaders is that through clever per-vertex transformations you can treat a single geometry as if it were multiple objects.
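As a rough illustration of that bookkeeping, here is a plain-JavaScript sketch with no ThreeJS dependency. buildBars is a hypothetical helper that appends four corner vertices and two triangles per bar to one shared vertex list, using the same vertex order, face order, 150-pixel height and one-pixel gap as the addBar function that follows. The triangles of bar k simply point at vertices 4k to 4k + 3.

```javascript
// Hypothetical sketch of a single "geometry" holding many bars.
// Each bar contributes 4 vertices and 2 triangles indexing into one shared array.
const BAR_HEIGHT = 150;

function buildBars(bars) {
  const vertices = []; // [x, y, z] triples
  const faces = [];    // [a, b, c] vertex indices

  for (const { x, width } of bars) {
    const base = vertices.length; // index of this bar's first vertex (4 * bar)
    vertices.push(
      [x, 0, 0],                      // bottom-left
      [x + width - 1, 0, 0],          // bottom-right (1 pixel gap between bars)
      [x + width - 1, BAR_HEIGHT, 0], // top-right
      [x, BAR_HEIGHT, 0]              // top-left
    );
    // Two triangles covering the quad, in the same order as addBar's Face3 calls.
    faces.push([base + 0, base + 1, base + 2]);
    faces.push([base + 0, base + 2, base + 3]);
  }
  return { vertices, faces };
}

const { vertices, faces } = buildBars([
  { x: 0, width: 10 },
  { x: 10, width: 10 },
]);
console.log(vertices.length, faces.length); // 8 4
console.log(faces[2].join(","));            // 4,5,6
```

Because everything lives in one vertex list, the whole spectrum can be drawn as one mesh, and the shader alone decides how each group of four vertices moves.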

I will be drawing 50 bars on screen, but in reality I am going to generate a single geometry which will hold 50 clusters of 4 vertices and 2 faces each, as follows:

// Assumes a global geometry (a THREE.Geometry) and the material created above
function addBar(x, width){
  // Generate id on a per-bar basis
  var idx = geometry.vertices.length / 4;

  // Each bar has 4 vertices
  geometry.vertices.push(
    new THREE.Vector3( x, 0, 0 ),
    new THREE.Vector3( x + width - 1, 0, 0 ),   // Minus one pixel to be
    new THREE.Vector3( x + width - 1, 150, 0 ), // able to differentiate bars
    new THREE.Vector3( x, 150, 0 )
  );

  // Each bar has 2 faces
  var face = new THREE.Face3( idx * 4 + 0, idx * 4 + 1, idx * 4 + 2 );
  geometry.faces.push( face );
  face = new THREE.Face3( idx * 4 + 0, idx * 4 + 2, idx * 4 + 3 );
  geometry.faces.push( face );

  // As well as two faceVertexUvs (one per face)
  // Useful if applying image textures
  geometry.faceVertexUvs[0].push([
    new THREE.Vector2( 0, 1 ),
    new THREE.Vector2( 1, 1 ),
    new THREE.Vector2( 1, 0 )
  ]);
  geometry.faceVertexUvs[0].push([
    new THREE.Vector2( 0, 1 ),
    new THREE.Vector2( 1, 0 ),
    new THREE.Vector2( 0, 0 )
  ]);

  // For every vertex we create a translation vector
  // and an id (every 4 vertices share the same id)
  for(var i = 0; i < 4; i++){
    material.attributes.translation.value.push(new THREE.Vector3(0.0, 145.0, 0.0));
    material.attributes.idx.value.push(idx);
  }

  // ...and a single amplitude uniform entry for each bar
  material.uniforms.amplitude.value.push(1.0);
}

// Generating 50 bars, 10 pixels wide each, across 500 pixels (x from -250 to 250)
for(var i = 0; i < 50; i += 1){
  addBar( -((10 * i) - 250) - 10, 10 );
}

Pull audio from microphone using WebRTC

Now, on to the WebRTC part of it all. We need to fetch the audio from the microphone:

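// audioCtx, analyser, source, bufferLength and dataArray are globals shared with animate()
function start_mic(){
  // navigator.mediaDevices.getUserMedia is the current, promise-based form of
  // the deprecated callback-style navigator.getUserMedia
  if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
    navigator.mediaDevices.getUserMedia({ audio: true, video: false })
      .then(function( stream ) {
        // The audio context and the analyser pull the audio data and
        // allow us to store it in an array of frequency volumes
        audioCtx = new (window.AudioContext || window.webkitAudioContext)();
        analyser = audioCtx.createAnalyser();

        // Our source is our WebRTC audio stream
        source = audioCtx.createMediaStreamSource( stream );
        source.connect(analyser);
        analyser.fftSize = 2048;
        bufferLength = analyser.frequencyBinCount; // fftSize / 2 = 1024 bins

        // We will pull the audio data for each bar from this array
        dataArray = new Uint8Array( bufferLength );

        // Let's begin animating
        animate();
      })
      .catch(function( err ) {
        // Permission denied, no microphone available, etc.
        console.error('Could not access the microphone:', err);
      });
  } else {
    // fallback: getUserMedia is not available in this browser
  }
}

and feed it to the shader through the amplitude uniform: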

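function animate(){
  requestAnimationFrame( animate );

  // Fill dataArray with the current frequency volumes (0 to 255 per bin)
  analyser.getByteFrequencyData(dataArray);

  // Sample every second bin, starting at bin 20, and invert the volume so
  // that 1.0 means silence and 0.0 means full volume
  for(var i = 0; i < 50; i++) {
    material.uniforms.amplitude.value[i] = -(dataArray[(i + 10) * 2] / 255) + 1;
  }

  // render() is defined elsewhere in the demo (e.g. renderer.render(scene, camera))
  render();
}

Using our GLSL Shader to Animate the Spectrum Bars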

Everything we've done so far is fine and dandy, but we need to tell the shader what to do with the audio data.
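First, it is worth pinning down what that audio data is. The sketch below reproduces the CPU side of animate() in plain JavaScript: which analyser bin each bar reads, roughly which frequency that bin represents, and the 0.0 to 1.0 value that ends up in the amplitude uniform. The helper names are hypothetical, and the 48 kHz sample rate is an assumption; in the browser the real value is audioCtx.sampleRate and depends on the device.

```javascript
// Sketch of the CPU side of animate(): which analyser bin each bar reads,
// the approximate frequency of that bin, and the value sent as amplitude[i].
// Assumes fftSize = 2048 (as in start_mic) and a sample rate you pass in.
const FFT_SIZE = 2048;
const BAR_COUNT = 50;

// Bin index read for bar i, copied from animate(): dataArray[(i + 10) * 2]
function binForBar(i) {
  return (i + 10) * 2;
}

// Analyser bin k corresponds to a frequency of about k * sampleRate / fftSize.
function binFrequency(bin, sampleRate) {
  return (bin * sampleRate) / FFT_SIZE;
}

// Same mapping as animate(): a byte 0..255 becomes 1.0 (silent) .. 0.0 (loudest).
function amplitudeFromByte(byteValue) {
  return 1 - byteValue / 255;
}

const sampleRate = 48000; // assumption; read audioCtx.sampleRate in a browser
const firstBin = binForBar(0);
const lastBin = binForBar(BAR_COUNT - 1);
console.log(firstBin, lastBin);                  // 20 118
console.log(binFrequency(firstBin, sampleRate)); // 468.75
console.log(binFrequency(lastBin, sampleRate));  // 2765.625
console.log(amplitudeFromByte(0), amplitudeFromByte(255)); // 1 0
```

Since animate() reads every second bin from bin 20 onwards, the 50 bars span roughly 470 Hz to 2.8 kHz at 48 kHz (a bit lower at 44.1 kHz), which is worth keeping in mind if you want the display to cover more of the bass or treble.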

A ThreeJS vertex shader works with at least three positional inputs: the position attribute (the vertex’s coordinates relative to the object it belongs to, in the object’s own local space), the model view matrix (which transforms those local coordinates into the camera’s coordinate space), and the projection matrix (which projects camera-space coordinates onto the screen, into what WebGL calls clip space).
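The sketch below walks a single vertex through that chain on the CPU, in plain JavaScript with 4×4 matrices stored in column-major order, as WebGL expects. The helpers (mulMatVec, translation, orthographic, transform) are hypothetical, and the orthographic camera is only an example; the post does not say which camera the demo uses.

```javascript
// Sketch of projectionMatrix * modelViewMatrix * vec4(position, 1.0) on the CPU.
// Matrices are 16-element arrays in column-major order, as WebGL uses.

// Multiplies a column-major 4x4 matrix by a 4-component vector.
function mulMatVec(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    for (let col = 0; col < 4; col++) {
      out[row] += m[col * 4 + row] * v[col];
    }
  }
  return out;
}

// Translation matrix (column-major: the offset lives in elements 12..14).
function translation(tx, ty, tz) {
  return [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, tx, ty, tz, 1];
}

// Orthographic projection with the same layout as the classic glOrtho matrix.
function orthographic(left, right, bottom, top, near, far) {
  return [
    2 / (right - left), 0, 0, 0,
    0, 2 / (top - bottom), 0, 0,
    0, 0, -2 / (far - near), 0,
    -(right + left) / (right - left),
    -(top + bottom) / (top - bottom),
    -(far + near) / (far - near),
    1,
  ];
}

// Local position -> camera space -> clip space, then the divide by w that the
// GPU performs to reach normalized device coordinates in [-1, 1].
function transform(projection, modelView, position) {
  const clip = mulMatVec(projection, mulMatVec(modelView, [...position, 1]));
  return clip.map((c) => c / clip[3]);
}

// Example: object 500 units in front of the camera, view of x in [-250, 250], y in [0, 300].
const modelView = translation(0, 0, -500);
const projection = orthographic(-250, 250, 0, 300, 1, 1000);
console.log(transform(projection, modelView, [250, 150, 0]));
```

Because the spectrum shader shifts position before either matrix is applied, the offset is expressed in the object’s own coordinates and behaves the same wherever the camera sits.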

In this case we are going to alter the position attribute, before either matrix is applied:

vertexShader: [
  "attribute vec3 translation;",
  "attribute float idx;",
  "uniform float amplitude[ 50 ];",
  "varying vec3 vNormal;",
  "varying float amp;",
  "void main() {",
  "gl_PointSize = 1.0;",
  "vNormal = normal;",
  "highp int index = int(idx);",
  "amp = amplitude[index];",
  "vec3 newPosition = position + normal + vec3(translation * amp);",
  "gl_Position = projectionMatrix * modelViewMatrix * vec4(newPosition, 1.0);",
  "}"
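].join("\n"),

As promised, you can see how it all (the attributes and the uniform) comes together in these lines:

"amp = amplitude[index];",
"vec3 newPosition = position + normal + vec3(translation * amp);",

Each vertex reads its bar’s entry in the amplitude uniform (basically 1.0 minus the normalized volume of the frequency this bar represents) and scales its translation attribute by it. Since translation only has a Y component, newPosition is the original position shifted along Y: a silent bar is moved the full 145 pixels, while a bar at maximum volume is not moved at all.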

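To double-check the arithmetic, here is the body of that vertex shader re-expressed as a plain-JavaScript function that runs once per vertex. It is a sketch, not how ThreeJS executes shaders: vertexStage and makeVertices are hypothetical names, the two bars are laid out by hand in the addBar style, and the normals are set to zero for the example. Note how the attributes differ from vertex to vertex while the amplitude array is shared by all of them.

```javascript
// CPU re-expression of the spectrum vertex shader, one call per vertex:
//   amp = amplitude[int(idx)];
//   newPosition = position + normal + translation * amp;
// Attributes (position, normal, translation, idx) differ per vertex;
// the amplitude uniform array is shared by every vertex in the draw call.
function vertexStage(vertex, amplitude) {
  const index = Math.trunc(vertex.idx); // GLSL int() truncates toward zero
  const amp = amplitude[index];
  const newPosition = vertex.position.map(
    (p, axis) => p + vertex.normal[axis] + vertex.translation[axis] * amp
  );
  return { newPosition, amp }; // amp is also handed on as a varying
}

// Two bars, 4 vertices each, laid out like addBar, with zero normals (assumed).
function makeVertices() {
  const out = [];
  for (let bar = 0; bar < 2; bar++) {
    const x = bar * 10;
    for (const [px, py] of [[x, 0], [x + 9, 0], [x + 9, 150], [x, 150]]) {
      out.push({
        position: [px, py, 0],
        normal: [0, 0, 0],
        translation: [0, 145, 0], // same per-vertex value addBar pushes
        idx: bar,
      });
    }
  }
  return out;
}

// Bar 0 is silent (amp 1.0), bar 1 is at full volume (amp 0.0).
const amplitude = [1.0, 0.0];
const moved = makeVertices().map((v) => vertexStage(v, amplitude));
console.log(moved.map((r) => r.newPosition[1]).join(" "));
// 145 145 295 295 0 0 150 150
```

All four vertices of a bar receive the same offset, so each bar slides as a whole instead of stretching.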
The final shader looks like this:

THREE.SpectrumShader = {
  defines: {},
  attributes: {
    "translation": { type:'v3', value:[] },
    "idx": { type:'f', value:[] }
  },
  uniforms: {
    "amplitude": { type: "fv1", value: [] }
  },
  vertexShader: [
    "attribute vec3 translation;",
    "attribute float idx;",
    "uniform float amplitude[ 50 ];",
    "varying vec3 vNormal;",
    "varying float amp;",
    "void main() {",
    "gl_PointSize = 1.0;",
    "vNormal = normal;",
    "highp int index = int(idx);",
    "amp = amplitude[index];",
    "vec3 newPosition = position + normal + vec3(translation * amp);",
    "gl_Position = projectionMatrix * modelViewMatrix * vec4(newPosition, 1.0);",
    "}"
  ].join("\n"),
  fragmentShader: [
    "varying float amp;",
    "void main() {",
    "gl_FragColor = vec4(-(amp) + 1.0, 0.5 + (amp/2.0), 0.0, 1.0);",
    "}"
  ].join("\n")
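};

As you can see in the fragmentShader, I reuse the amp variable to get a nice fade from green (silence, amp = 1.0) to orange as the volume of a bar reaches its max level (amp = 0.0). Sharing amp between the two shaders is possible thanks to the varying keyword: the vertex shader writes the varying, and the fragment shader receives it interpolated across each face.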
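The color math can be checked the same way. This plain-JavaScript sketch mirrors the fragment shader’s expression; barColor and toRgb255 are hypothetical helpers, and the 0 to 255 conversion is only there to make the output easy to read. Since all four vertices of a bar carry the same amp, the interpolated varying is constant across the bar, so each bar is painted in one flat color.

```javascript
// CPU version of the fragment shader's color:
//   gl_FragColor = vec4(-(amp) + 1.0, 0.5 + (amp/2.0), 0.0, 1.0);
// amp = 1.0 means a silent bar, amp = 0.0 a bar at full volume.
function barColor(amp) {
  return [1.0 - amp, 0.5 + amp / 2.0, 0.0, 1.0]; // R, G, B, opacity in 0..1
}

// Converts 0..1 channels to 0..255 integers for easier inspection.
function toRgb255([r, g, b]) {
  return [r, g, b].map((c) => Math.round(c * 255));
}

console.log(toRgb255(barColor(1.0)).join(",")); // 0,255,0   (silent: green)
console.log(toRgb255(barColor(0.5)).join(",")); // 128,191,0 (halfway)
console.log(toRgb255(barColor(0.0)).join(",")); // 255,128,0 (loudest: orange)
```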

As always, the code for this demo is available on GitHub:

https://github.com/agilityfeat/WebRTC-Spectrum-Demo/tree/master

Be sure to drop me a line if you find this info helpful.

Happy coding!
